Starting this week, our Face Detection and Recognition service supports two more advanced attributes, lips and eyes, further extending the SkyBiometry API. These attributes enable new scenarios, for example filtering submitted photos to check that a person's eyes are open or that the mouth is closed. Of course, this does not change the fact that facial recognition technology can still recognize and identify an individual whose eyes are closed; you can read more here. We have also significantly improved the accuracy of the existing attributes. Every attribute value is returned together with a confidence in the range 0-100%. If an attribute value cannot be determined reliably, it is not returned at all, so that you are not confused by noisy results.

All currently supported attributes along with possible values are summarized in the table below:

| Attribute | Possible values |
|---|---|
| gender | male, female |
| glasses | true, false |
| dark_glasses | true, false |
| smiling | true, false |
| lips | sealed, parted |
| eyes | open, closed |

More attributes are coming in the future. Please use our UserVoice page to influence which ones come first!
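
As an illustration of the photo-filtering scenario, here is a minimal C# sketch. It assumes the attributes of one detected face have already been parsed from the response into a dictionary of value/confidence pairs; the `IsAcceptablePhoto` helper, the dictionary shape, and the 80% threshold are hypothetical choices for this example, not part of the SkyBiometry API or C# wrapper. Because unreliable attributes are omitted from the response, the sketch treats a missing attribute as "unknown" and rejects the photo.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical: attribute name -> (value, confidence 0-100) pairs already parsed
// from a faces/detect response. Attributes the service could not determine
// reliably are absent from the dictionary.
bool IsAcceptablePhoto(
    IReadOnlyDictionary<string, (string Value, int Confidence)> attributes,
    int minConfidence)
{
    // A missing attribute means "unknown", so reject rather than guess.
    if (!attributes.TryGetValue("eyes", out var eyes) ||
        !attributes.TryGetValue("lips", out var lips))
    {
        return false;
    }

    // Accept only open eyes and a closed mouth, each at or above the threshold.
    return eyes.Value == "open" && eyes.Confidence >= minConfidence &&
           lips.Value == "sealed" && lips.Confidence >= minConfidence;
}

var goodPhoto = new Dictionary<string, (string Value, int Confidence)>
{
    ["eyes"] = ("open", 92),
    ["lips"] = ("sealed", 85),
};
var unsurePhoto = new Dictionary<string, (string Value, int Confidence)>
{
    ["eyes"] = ("open", 92), // "lips" omitted: not reliably determined
};

Console.WriteLine(IsAcceptablePhoto(goodPhoto, 80));   // True
Console.WriteLine(IsAcceptablePhoto(unsurePhoto, 80)); // False
```

Rejecting on a missing attribute is a deliberately conservative choice; an application that prefers fewer false rejections could instead accept photos where the attribute is absent.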

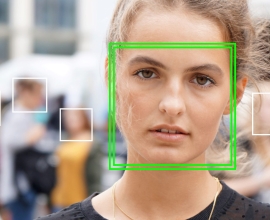

Another addition to the service is the `detect_all_feature_points` parameter. If it is set to true when calling faces/detect or another method, the response contains up to 68 points in addition to the left eye center, right eye center, nose tip, and mouth center points, which are always returned. Each point has an id and a confidence in the range 0-100%. For the additional points, the id has the format 0x03NN, where NN is the point number. Each number corresponds to a specific point on the face, as you can see in our demo: just check the "Detect all feature points" checkbox before clicking Submit or selecting a picture.
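
To make the id format concrete, the following C# sketch extracts the point number from an additional feature point id. It assumes that NN is the hexadecimal low byte of a 16-bit id (so 0x0305 is point 5); `TryGetPointNumber` is a hypothetical helper, not a wrapper method, and the id 0x0200 is just an arbitrary value outside the 0x03NN range.

```csharp
using System;

// Hypothetical helper, assuming an additional feature point id is the 16-bit
// value 0x03NN, where the low byte NN is the point number.
bool TryGetPointNumber(int id, out int pointNumber)
{
    if ((id >> 8) == 0x03) // high byte must be 0x03
    {
        pointNumber = id & 0xFF; // low byte is the point number
        return true;
    }
    pointNumber = -1;
    return false;
}

foreach (int id in new[] { 0x0305, 0x0342, 0x0200 })
{
    Console.WriteLine(TryGetPointNumber(id, out int n)
        ? $"0x{id:X4} -> additional point {n}"
        : $"0x{id:X4} -> not an additional point id");
}
```

Checking the high byte first lets the same loop run over every point in a response, skipping ids outside the 0x03NN range such as those of the always-returned points.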

If you are using our C# wrapper, pass the parameter in the `additionalArgs` argument:

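```csharp
using System.Collections.Generic;

// Request all feature points by passing detect_all_feature_points=true
// through the additionalArgs argument (the last parameter).
FCResult result = await client.Faces.DetectAsync(
    new string[] { url }, null,
    Detector.Default, Attributes.Default,
    new KeyValuePair<string, object>[] {
        new KeyValuePair<string, object>("detect_all_feature_points", true) });
```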

We have also greatly updated our documentation to include information about the fields of the returned response objects and the possible error codes.